When the orchard robots arrive, it would be a waste of technology if they only handled harvest.

Once you have an autonomous vehicle adapted for orchards, equipped with canopy-analyzing sensors and machine-learning computers, growers should be able to use it all season long by changing the tool on the end of a robotic arm. A cutter for winter pruning could be swapped for a string thinner at bloom, and then for a fruit grabber at harvest.

That’s the vision, anyway, according to Washington State University biological engineering professor Manoj Karkee, who is involved in a variety of orchard robotics research projects. They include a robotic pruning collaboration with Oregon State University and Carnegie Mellon University that builds on the same framework of technology that underlies robotic harvest.

“We are developing vision systems for understanding the canopy,” he said. “Understanding canopies, what is their geometry, these pieces of information will be very useful in applications such as training and pruning.”

It’s not the industry’s first pass at automated pruning.

A federal grant-funded project from 2011 to 2014, led by Purdue University and Penn State University, resulted in a robot-friendly mathematical protocol for pruning decisions, but not commercial robots.

Amy Tabb, an agricultural engineer at the U.S. Department of Agriculture’s Appalachian Fruit Research Station in West Virginia, has also developed computer vision programs for tree architecture analysis. She said that seeing and reconstructing trees in three dimensions is a surprisingly difficult computer vision challenge, let alone getting robots to maneuver in 3D canopies.

“The perception problem is a lot easier in the 2D canopy” than in the tall spindle systems she was working in, she said. “Either your system is going to be slow or you have to change the canopy.”

Create the canopy robots need

The new WSU-OSU-CMU collaboration, funded by the Washington Tree Fruit Research Commission, has the advantage of working in orchards with more two-dimensional canopies.

“That’s why we like working in fruiting wall orchards and vineyards,” said George Kantor, a Carnegie Mellon robotics professor. He and project scientist Abhi Silwal, who earned his doctorate at WSU working with Karkee, have developed camera systems robust enough to analyze the canopy in variable light conditions.

Silwal said robotic pruning may actually give orchards a more consistent, uniform structure that lends itself better to mechanized harvest.

They worked with Karkee’s group to record human pruners at work, to see whether the machines could learn the art of pruning from people.

“We found out they were actually really inconsistent in terms of following the pruning rules,” Silwal said. “They don’t have time to accurately measure what 6 inches is, they estimate. And that varies from pruner one to pruner two.”

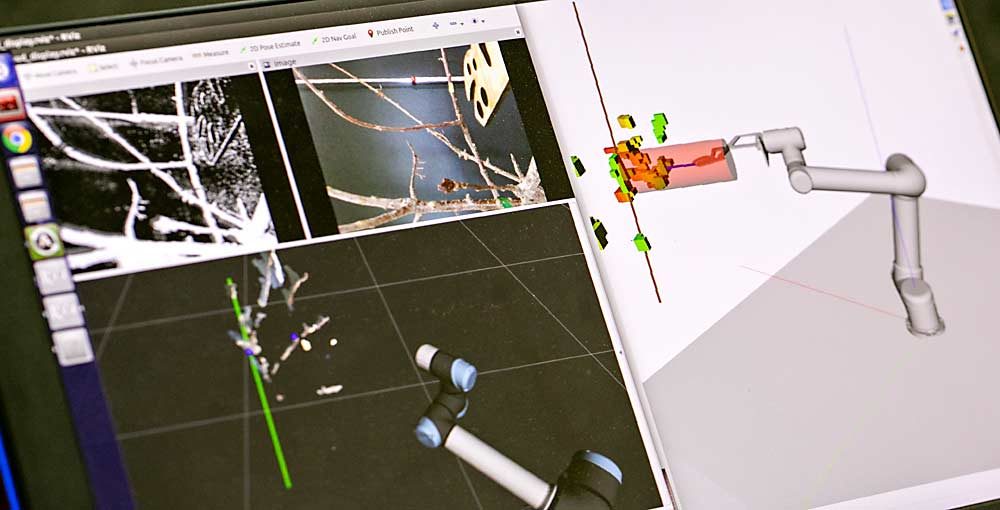

The AI, on the other hand, measures diameters and distances and counts buds before making each and every decision, in nearly real time.

“We can optimize cut point locations so, in the growing season, the apples are placed in roughly equally spaced locations,” Silwal said.

The algorithms can do that; next, the team needs to prove that a mechanical pruner on a robotic arm, under development by Joe Davidson’s lab at OSU, can turn that analysis into effective, efficient action.

Robotic building blocks

From the perspective of growers waiting for commercial tools, the field of orchard robotics appears to be moving slowly, but great progress has actually been made on the building blocks that must come together to meet complex orchard automation needs, Kantor said in a talk at the Washington State Tree Fruit Association’s Annual Meeting in December.

“There are three fundamental capabilities: mobility, vision and interaction,” Kantor said. Successful robotic work in an orchard requires all three areas of engineering.

Autonomous mobility is here, he said, although companies are still figuring out how to sell it to growers in useful ways. Computer vision has also made great strides, thanks to advances in machine learning.

“Today, if you can manage to see something in an image, we can train a computer to do it,” he said. “So, we can drive around and see things, and there’s a lot of value in driving around and seeing things, but at some point, we want to do something.”

Engineers call doing something “manipulation,” and it’s the hardest part, Kantor said, because it depends on the other technologies being in place first and because it requires complex mechanical equipment that takes time to build, test and improve. He added that some manipulation tasks, such as pruning, might be easier than the Holy Grail of harvesting.

Chicken or egg?

Whether robotic pruning poses an easier engineering problem than apple harvest, or a more challenging one, depends on which collaborator you ask.

From an AI perspective, pruning is more complex, Karkee said, because the system must build a full 3D model of each tree, down to the diameter of each branch, to decide which branches to remove.

“We have to make canopy or tree-level decisions, versus we can pick one apple at a time with harvesting,” he said. Also, it’s a lot easier to detect big, red, round apples. “For dormant pruning, we have objects that are not well colored, with smaller diameters, so we need more resolution and higher computational capabilities.”

But from the robotic manipulation perspective, pruning presents fewer challenges, Kantor and Silwal said.

“When you pick apples, you have to go so fast and you have to be perfect, because the fruit are easily damaged,” Kantor said. “You don’t have to worry about damaging pruning.”

Plus, the window for dormant pruning is longer, so robots might not need to work as fast as they would at harvest, Silwal said.

This winter marks the first attempt to put all the pieces of the research together (computer vision, analysis and the robotic arm), but the researchers stress that it is still a proof of concept. Whether the technology becomes viable for growers depends on how effective it can be made and at what cost, Kantor and Karkee said.

“The robots are not there yet, it’s not commercially viable yet, but the data can be used to make farming more efficient,” Karkee said. “In the long run, this all fits quite well together into developing smart agricultural systems.” •

—by Kate Prengaman


Correction:

This article has been updated to correct the computer architecture projects Amy Tabb conducted.
