As Will Beightol slowly drove through a vineyard in early June, a box the size of a microwave mounted to his ATV snapped thousands of pictures.

Known as the Flash, the box is a crop estimation sensor developed by Carnegie Mellon University engineers. Startup Bloomfield Robotics spun off from their research to commercialize a piece of technology the wine industry anxiously awaits.

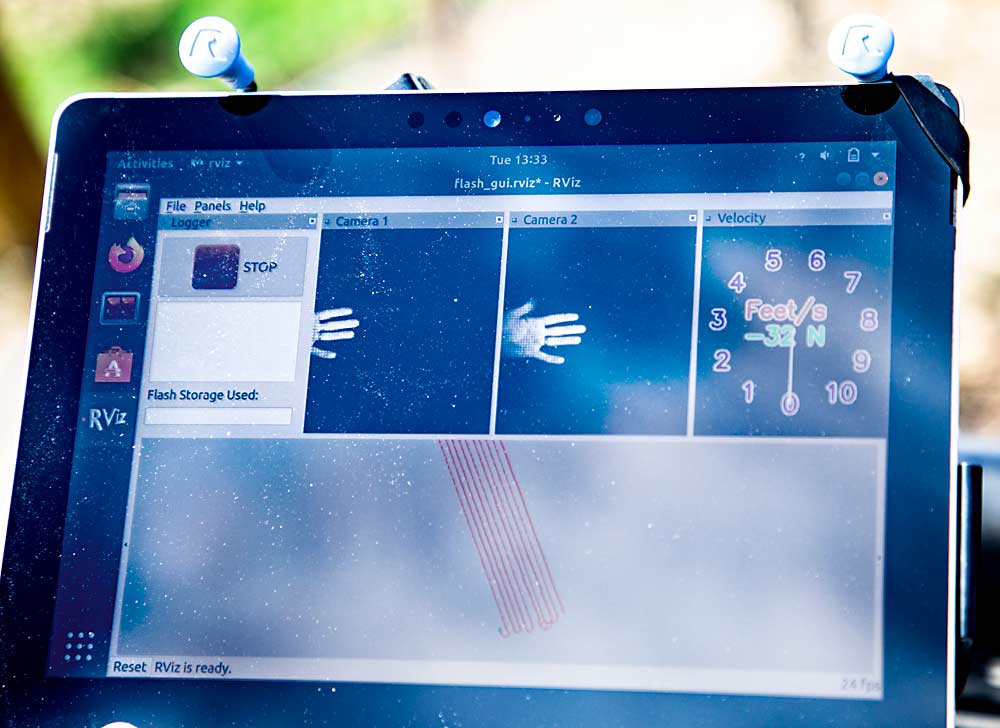

Twice a week, Beightol creeps through six blocks at vineyards in the Horse Heaven Hills American Viticultural Area south of Prosser, Washington, as the Flash takes pictures of the fruiting zone, illuminating each frame with powerful strobes. The idea is to make crop estimations, a critical component of wine quality and grower contracts, more efficient, accurate and objective, said Beightol, owner of Collab Wine Co., a wine industry consulting and management firm.

Bloomfield Robotics, which formed in 2018, has two products so far: the Blink and the Flash. The Blink is a handheld microscope with a liquid camera lens that’s designed to take 10 different images from 10 different focal planes at once. It could count and measure the size, density and color of trichomes on a cannabis plant, for example.

The Flash makes more sense for the fruit industry. It uses two cameras that shoot a combined 10 frames per second. From the middle of a vine row alley, traveling at up to 40 miles per hour, it shoots images of grape clusters used to analyze size and color. It also would show leaf yellowing or other visible traits on the plants.

Both systems use algorithms called deep learning, in which the computer “learns” about an object the more it “sees” that object, said Mark DeSantis, Bloomfield CEO.

The company has seven Flash cameras; three of them are in trials in vineyards in New York, California and Washington.

DeSantis aims to make the cameras affordable enough for each grower to own one. The company would charge a monthly fee that covers the service and spreads out the cost of the equipment, much like a cellular plan that includes a new phone. He declined to reveal price estimates.

Similar technology for assessing apple crop load from United Kingdom-based Intelligent Fruit Vision debuted a few years ago, with Washington Tractor as the U.S. distributor, and several research groups are looking at the possibility of using smartphone cameras to collect similar data with a lower-cost sampling approach, rather than scanning each row.

The technology to capture the data is there, DeSantis said, and the race is on to see who can best turn the sensor insights into actionable information.

Getting closer

Automating crop estimation with precision sensors has long been a goal of the grape industry.

“That’s like the Holy Grail of precision viticulture right now,” said Melissa Hansen, research program director for the Washington Wine Commission, which in late June awarded Beightol a grant to help him broaden the scope of his trials this year.

The technology is promising but still not accurate enough for commercial use, Hansen said. As with any vision system, branches and leaves sometimes get in the way. Robotic apple harvester prototypes have the same problem.

Vineyard imaging systems were a component of a research project of Cornell University and Carnegie Mellon, funded at $6.2 million over four years by the National Grape Research Alliance and the Specialty Crop Research Initiative.

In the Cornell project, dubbed “The Efficient Vineyard,” the imaging prototype cranked out geo-referenced visible berry counts across a vineyard block, said Terry Bates of the Cornell Lake Erie Research and Extension Laboratory. But the key word is “visible,” Bates said. It only can count berries within the camera’s field of view, which was limited in the Concord blocks where they first tested it.

“It accurately counts what it can see but does not have ‘X-ray vision’ to see berries occluded by leaves, stems (or) other berries,” Bates said.

That’s where the grower comes in, said George Kantor, the Carnegie Mellon researcher who led the development of the technology and co-founded Bloomfield. His research team is looking for ways to sense occluded fruit, but for now, the system doesn’t need to see all the berries to work in consistently managed vineyards. Growers must count a few sample vines by hand and plug that data into the computer so it can develop a ratio of “visible” to “invisible” fruit for yield estimations.

With this calibration, his team estimates the system makes consistently accurate yield estimates in table and wine grapes when at least 10 percent of the fruit is visible. Seeing more of the fruit doesn’t significantly improve the estimates, he said.
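
The calibration Kantor describes can be sketched in a few lines of Python. This is an illustration only, not Bloomfield's actual method: the function names and all of the counts are hypothetical, and it assumes a single block-wide ratio of visible to total fruit.

```python
def visible_fraction(hand_counts, camera_counts):
    """Share of berries the camera saw on the hand-counted calibration vines."""
    total_hand = sum(hand_counts)
    if total_hand <= 0:
        raise ValueError("hand counts must sum to a positive number")
    return sum(camera_counts) / total_hand


def estimate_total_berries(visible_count, fraction):
    """Scale a block's visible berry count up by the calibrated fraction."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return visible_count / fraction


# Hypothetical calibration data: berries counted by hand on three sample
# vines, and berries the camera detected on those same vines.
hand_counts = [400, 500, 600]
camera_counts = [100, 125, 150]

fraction = visible_fraction(hand_counts, camera_counts)
block_estimate = estimate_total_berries(50_000, fraction)
print(fraction, block_estimate)
```

With these made-up numbers the camera sees a quarter of the berries on the calibration vines, so 50,000 visible berries across the block scale up to an estimated 200,000.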

Data transfer is another challenge, Kantor said. The camera collects a terabyte of photos in just a few hours. With most Wi-Fi networks, the grower would get faster results by shipping a hard drive to Pittsburgh than trying to upload that much to Bloomfield’s cloud, he said. The current version of the camera includes an embedded computer to process data from those photos in the field, so only the analysis needs to be transferred.
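
The trade-off between uploading and shipping comes down to simple arithmetic. The sketch below assumes a decimal terabyte (10^12 bytes) and a hypothetical 10-megabit-per-second uplink; the connection speed is an assumption, not a figure from Kantor or Bloomfield.

```python
def upload_hours(num_bytes, uplink_mbps):
    """Hours needed to upload num_bytes over a link of uplink_mbps megabits per second."""
    if uplink_mbps <= 0:
        raise ValueError("uplink speed must be positive")
    bits = num_bytes * 8
    seconds = bits / (uplink_mbps * 1_000_000)
    return seconds / 3600


TERABYTE = 10**12  # decimal terabyte, in bytes
ASSUMED_UPLINK_MBPS = 10  # hypothetical rural uplink speed

hours = upload_hours(TERABYTE, ASSUMED_UPLINK_MBPS)
print(f"{hours:.0f} hours, or about {hours / 24:.1f} days")
```

At that assumed speed, a single terabyte would take roughly nine days of uninterrupted uploading, which is why a shipped hard drive can arrive first.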

The machine is closest to commercialization in wine grapes, but Bloomfield hopes to use it in a variety of specialty crops, including apples.

“Eventually, we want to look at everything,” Kantor said.

Currently, wine grape crop estimation relies on harvesting clusters from about 20 vines per block throughout July, counting the grapes, weighing them and entering the data into an equation that spits out a best guess of the coming volume. It’s a rite of passage for part-time employees and interns. Some growers have become quite accurate at it over the years, said Beightol, who grew up in the Horse Heaven Hills wine industry.

However, if the technology works as promised, the Flash would be even more accurate and objective because it would collect a much larger sample size. By driving down every fourth row, it could sample 225 plants per acre.

It could also yield earlier results, which could save money in thinning and allow winemakers and growers to adjust sooner. For example, last year’s crop was hit by a fall frost, said Beightol.

“If the crop load was more dialed in earlier in the season, you may be able to push up that harvest date,” Beightol said.

—by Ross Courtney
